Web Crawler

Dynamic web crawler that scrapes data from a website and saves it to a database.

Client: Smart Life Projects

Project Details

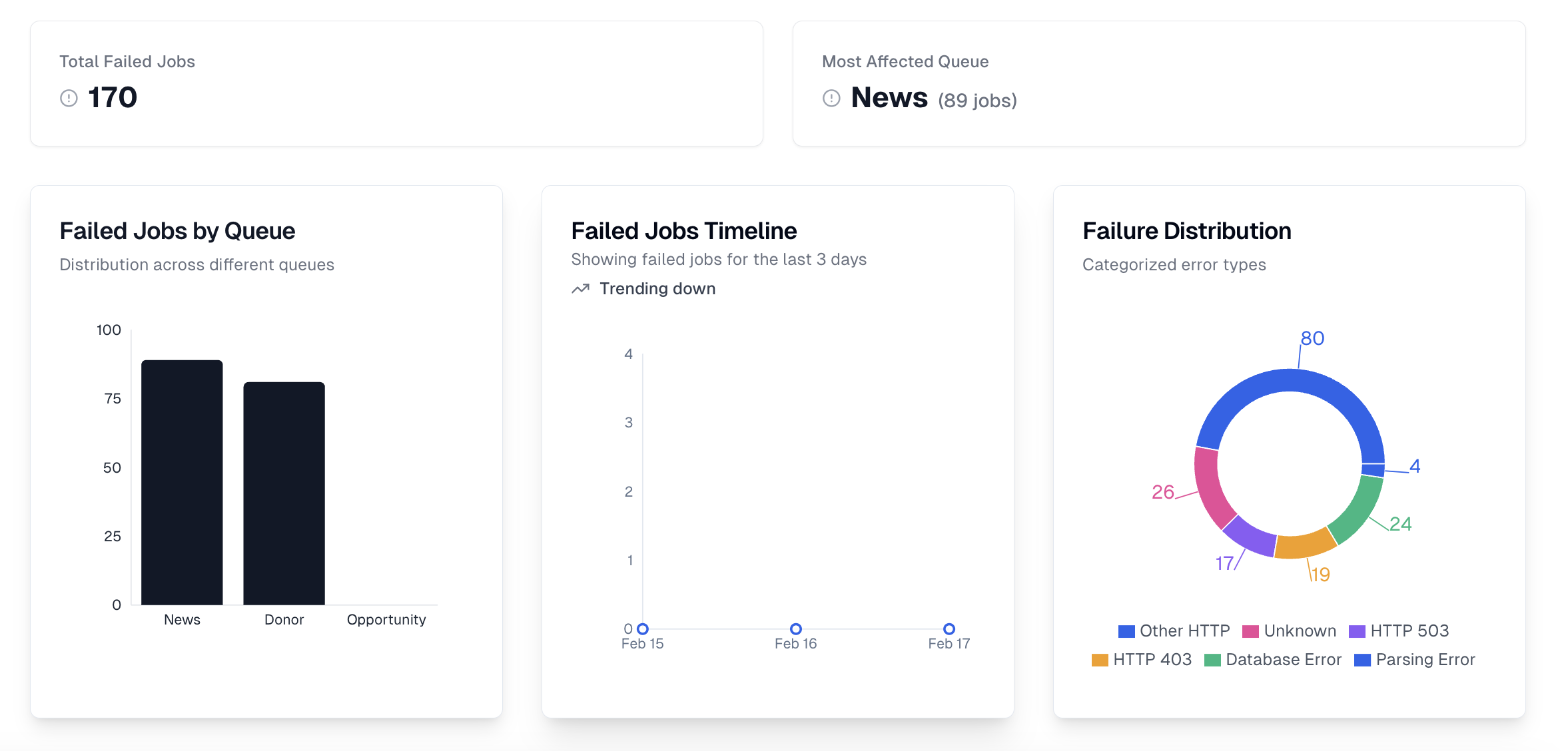

The Web Crawler project is a sophisticated data extraction tool designed for Smart Life Projects. The frontend, built with Next.js, TailwindCSS, and Shadcn UI, provides an intuitive interface for managing and visualizing the crawled data. The backend combines Flask and Python, utilizing Selenium and BeautifulSoup for efficient web scraping. Data processing is handled with Pandas, and the extracted information is stored in a Supabase database. The entire system is containerized using Docker and deployed on a Hostinger VPS, with infrastructure managed through Terraform and Ansible for easy scaling and maintenance. This project demonstrates our expertise in creating complex, distributed systems that combine web scraping, data processing, and modern web technologies.